Restoring trust in awarding exam grades: the case for a Personal Exam Grade Explainer

Blog Campaign / December 4, 2021

As the autumn term draws to a close, we are reflecting again on how exam candidates in 2022 will be able to trust that a grade is accurate and fair. We have proposed that candidates need access to understandable information about what has gone into its calculation.

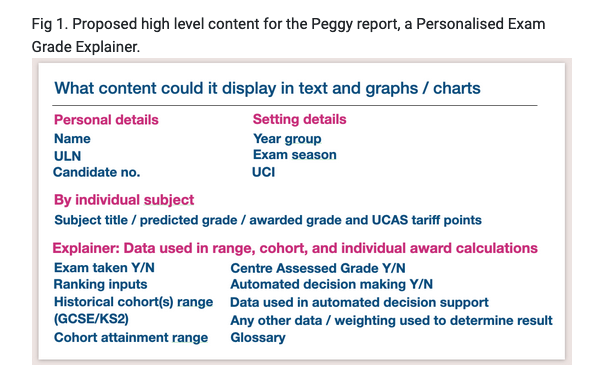

Every candidate should receive a Personal Exam Grade Explainer (shortened to P.E.G.E., which we’ll call “Peggy”): an individual report for each result. Peggy would transparently explain how *my* grade was calculated, rather than provide a generic description of the awarding process as materials do today.

Young people felt robbed of agency in 2020 when they were told their future had been decided by an algorithm. And in 2021, when Teacher Assessed Grades (TAGs) were used, some felt robbed of the chance to show in an exam that they could do better than their teachers thought them capable of. Neither situation will be entirely resolved, even if 2022 brings a return to full exams or is claimed to be algorithm free. The exams are neither normative nor truly criterion referenced, and there is also a comparable outcomes model on top. Who really understands it?

Explainability

Every candidate is *already* given a results slip today with some personal details and the grade. Using the same delivery mechanism, the report would also explain how each grade was reached for the candidate, making the data inputs transparent and understandable. Some candidates are still children aged under 18, and today they could argue that awarding bodies do not meet their existing obligations under UK data protection law and GDPR Articles 13 and 14 on the right to be informed, including about the use of automated decision making (or the comparable outcomes model).

“Given that children merit specific protection, any information and communication, where processing is addressed to a child, should be in such a clear and plain language that the child can easily understand.” (recital 58, the GDPR)

Automated decision making and profiling

The 2020 fallout will be lasting in the lives of thousands of affected students. It may also have a lasting effect in shaping their attitudes towards AI, after their experience of what the PM called ‘a mutant algorithm’. It cost not one, but two Ofqual executives their positions.

The explanation of any automated decision making or inputs should be simple and short, using text and graphics. Peggy might include a chart of the subject grade boundaries, a table of how comparable outcomes were determined, and the centre’s grade range, and explain the effect of historic cohort data from previous results. Cohort Key Stage 2 SATs results or GCSEs used in the model, as well as any individual test or competency scores, could be included. It would set out how each of those sets of data affected your individual result. A glossary and an explanation of how the appeals and resit processes work could be offered, as they are today.

Peggy would help exam boards meet their legal obligations to explain their personal data processing and any automated decision making, and help educational settings meet their obligations to explain how personal data are used and to ensure data accuracy. It should help expose any input errors more quickly. It would help schools and candidates understand how close they were to a grade boundary, supporting faster decisions on whether a grade is likely to change after an appeal and re-mark, or on whether to retake the exam. And overall, increased transparency should help to restore trust in the awarding process.
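To make the grade boundary idea concrete, here is a minimal sketch in Python of the kind of calculation a Peggy report might show a candidate. It is an illustration under stated assumptions, not a description of how any exam board works: the boundary marks, the 9 to 1 grade scale and the names `EXAMPLE_BOUNDARIES`, `BoundarySummary` and `summarise_boundary_position` are all invented for this example.

```python
from dataclasses import dataclass
from typing import Dict, Optional

# Hypothetical boundaries: the minimum mark needed for each grade.
# These numbers are invented for illustration only.
EXAMPLE_BOUNDARIES: Dict[int, int] = {
    9: 142, 8: 128, 7: 114, 6: 98, 5: 82, 4: 66, 3: 48, 2: 30, 1: 12,
}


@dataclass
class BoundarySummary:
    grade: Optional[int]                 # None if below the lowest boundary
    marks_above_boundary: Optional[int]  # marks above the awarded grade's minimum
    marks_to_next_grade: Optional[int]   # None if already at the top grade


def summarise_boundary_position(mark: int, boundaries: Dict[int, int]) -> BoundarySummary:
    """Say which grade a mark falls in and how close it is to the boundaries."""
    # Walk the boundaries from the lowest minimum mark to the highest.
    ordered = sorted(boundaries.items(), key=lambda item: item[1])
    grade: Optional[int] = None
    floor: Optional[int] = None
    next_minimum: Optional[int] = None
    for candidate_grade, minimum in ordered:
        if mark >= minimum:
            grade, floor = candidate_grade, minimum
        else:
            next_minimum = minimum
            break
    return BoundarySummary(
        grade=grade,
        marks_above_boundary=None if floor is None else mark - floor,
        marks_to_next_grade=None if next_minimum is None else next_minimum - mark,
    )


summary = summarise_boundary_position(112, EXAMPLE_BOUNDARIES)
print(summary)
```

With these made-up boundaries, a mark of 112 sits in grade 6, 14 marks above that boundary and 2 marks short of grade 7. Seeing that in plain terms is the kind of information that could help a candidate decide whether to ask about a review of marking. A real report would also need to explain the other inputs, such as comparable outcomes, which this sketch does not attempt.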

Going forwards

This will take collaboration and cooperation across Exam Boards, perhaps with the JCQ and the Federation of Awarding Bodies. It needs to be designed with a broad range of input and thorough testing. It might not start with every type of exam. This year, it might have had fewer inputs than usual, but even with only TAGs (Teacher Assessed Grades) the inputs used could have been set out for each student. Candidates should be offered a consistent standard and model of explainability, and should not need to navigate different formats just because their exams have been spread across a range of providers.

We first suggested this in 2020. We think Ofqual should begin work with the exam boards and JCQ with urgency, and we welcome others’ views.