NetSupport DNA complaint

legislation Prevent safeguarding / December 19, 2018

On Sunday December 16, the Sunday Telegraph published a story broadly about classroom management software, which specifically mentioned two separate companies: Impero, with its reported 2015 flaws, and NetSupportDNA, whose Marketing Manager was quoted. The story also included a quote from our Director, who had been asked for comment.

While we already responded to the article on Sunday with a blog which was broadly generic, we are more than happy to respond again, to clarify some of the subjects the Sunday Telegraph article raised from its own investigation, and to provide more information about the NetSupport product, in wording agreed with the company.

NetSupport asked the newspaper to take down its article, and it has. NetSupport also asked us to take down our blog, which we have declined to do, seeing the request as without grounds, although we offered to make any factual corrections to it instead, if needed.

They have not asked us for any corrections in this, or in any of our work to date.

We are very happy to post this agreed statement, as it is a subject close to our heart, and we welcome the opportunity to work more closely with the industry, and continue the amicable and professional conversation:

“We have been informed by NetSupport that the Sunday Telegraph article published on December 16, 2018, to which this blog responded, was taken down today at the request of NetSupport Group, following their IPSO complaint. While our response was broadly generic, we are happy to update our reply with specific regard to the NetSupportDNA education classroom management ‘safeguarding in schools’ software product, and the wording of this has been reviewed and approved (and where enlarged upon, noted in brackets) by NetSupport.

1. Regards remote webcam operation: This feature is disabled by default. Whether this software feature is then enabled, is a decision taken by the school. This decision is not made by the company. This decision is not made by the child / computer user, or their parent.

2. When an image may be taken: If a critical safeguarding keyword is copied, typed or searched for across the school network, NetSupport DNA’s webcam capture feature can capture an image of the user (not a recording/video) who has triggered the keyword. Since the solution is currently only running on a LAN (Local Area Network), the feature can only apply within the school, not at home. (NetSupport added: future plans will NOT at any time include the ability to capture the webcam on a device outside of school.)

3. However, triggers and flags can be created outside of school hours, through for example the caching of keywords, which then create the trigger when the device is brought back into school. Out of hours triggers created at home may therefore be captured by the school processing, after the event.

4. Regards the image and data created as a result: After NetSupport DNA’s webcam capture feature has captured an image of the user, the image and any related incident data are processed in the usual safeguarding process and manner determined by the school. These may feed into the Channel / Prevent programme as and when appropriate following the school policy. NetSupport DNA is not responsible for the onward processing in further systems or by further third parties. (NetSupport further added: “To be clear as a locally installed product, NetSupport has no access to the system or its content at any point.”)

5. Regards web monitoring, screen content is recorded all the time (NetSupport further added: “in non-viewable device memory”), so that when a search term or word triggers a flag, screen content from 15 seconds before the trigger word can be stored alongside the incident for context.”

– end of text approved by NetSupportDNA, image clip: NSDNA website –

Update: we see this morning that a helpful blog post on their site yesterday explains how a minute’s worth of screen content is captured on a rolling basis. Source: NetSupportDNA December 18, 2018, Blog: How does NetSupport DNA capture safeguarding events?

“How does the screen video recording work?

Much like a CCTV system that has a rolling hard disk, there is a rolling 60 second capture of screen which is only held in the system memory on the student’s PC and is not committed to file unless the keyword is triggered. This means there is no footage visible to anybody and its automatically deleted as it goes round in an encrypted form. Only if a critical keyword is triggered, would that then be converted into a viewable file of 60 seconds of raw footage.” [end quote]
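To illustrate what a "rolling" capture means in general terms, here is a minimal sketch of a fixed-size in-memory buffer. It is our own illustration and not NetSupport's code: the class name, method names, frame rate and string "frames" are all hypothetical, and the encryption mentioned in the quote is not modelled.

```python
from collections import deque


class RollingScreenBuffer:
    """Hypothetical rolling buffer: only the most recent frames stay in memory."""

    def __init__(self, seconds=60, frames_per_second=1):
        # A deque with maxlen discards the oldest item automatically once full.
        self._frames = deque(maxlen=seconds * frames_per_second)

    def add_frame(self, frame):
        # Called continuously, whether or not any keyword is ever triggered.
        self._frames.append(frame)

    def on_keyword_trigger(self):
        # Only at this point is the buffered content copied out for storage.
        return list(self._frames)


buffer = RollingScreenBuffer(seconds=60, frames_per_second=1)
for second in range(300):  # five minutes of activity, one frame per second
    buffer.add_frame(f"frame-{second}")

saved = buffer.on_keyword_trigger()
print(len(saved), saved[0], saved[-1])  # 60 frame-240 frame-299
```

In a design like this, capture runs all the time for every user. What a trigger changes is only whether the most recent minute is kept or overwritten.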

The Telegraph takedown

The Telegraph responded to NetSupport’s IPSO complaint, withdrawing the article: A 16 December article (“School pupils being spied on with webcams”) wrongly stated that NetSupport’s classroom software allows the company to “remotely enable webcams to spy on pupils”. NetSupport has told us, and we accept, that all data is in fact stored on schools’ own networks, not in the cloud or on servers operated by NetSupport, which is unable to access or control pupils’ webcams in any way. Nor can the software record video of students or send their details to police, as the article also wrongly asserted. We apologise for these errors.

Our Comment

We hope NetSupport is happy to have another opportunity to clarify their school software capability.

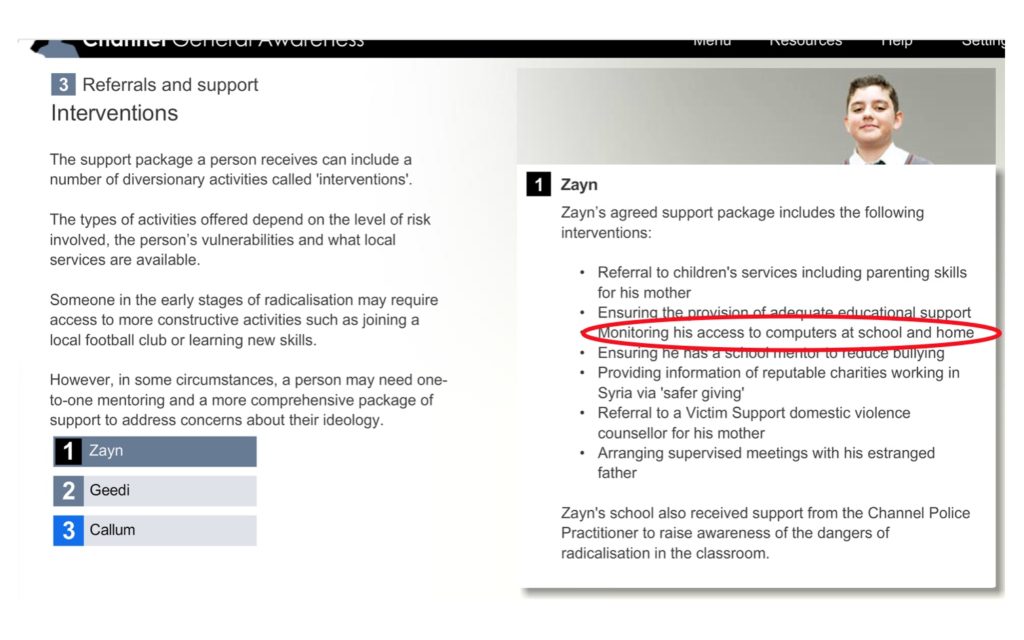

We welcome NetSupport’s willingness to meet and look forward to discussions with them in January, as well as newfound engagement from other similar providers, as we seek support for the sector-wide improvements needed. We are especially keen to research the differences between products in more depth, and share the concerns we receive from staff and children wrongly flagged as ‘at risk of suicide’ for looking up cliffs online, or as a potential gang member for searching for the animals, ‘black rhinos’. We hear from those who have been pranked by friends; those flagged in transitions from old to new systems with what appear to be overly-sensitive watchlists; those affected by overblocking of content, such as violence in Shakespeare texts; and children who end up in front of panels and senior staff to explain themselves, for nothing, and sometimes with lasting consequences for the child. The sector can fix these issues through improved joint working.

There are over 15 main suppliers of such systems to UK schools with a variety of different capabilities and policies, and further local decisions are made on implementation. We have sought engagement from many of the providers in the sector, including NetSupport Group, for over a year, and at various times in the last 12 months; for example, by asking for pre-publication input and comment on the report we wrote and published in May this year. We will also update this research topic in early 2019 in our next State of Data report.

This is a challenging, complex area of data processing in the public sector, conflating important areas of safeguarding and child protection with the politics of Prevent. It conflates age-old issues of bullying and social issues with old and new issues of technology, outsourcing to more commercial service providers in state schools and the associated aspects of monopolies, mergers and competition, and opaque data practices.

We hope children will soon also be consistently afforded the same opportunity to have their concerns heard when information is recorded about them through such tools, and to ask to see, and seek correction of, their records. We would like to see published rates of false flags and mistakes; common standards of statistical reporting, especially where non-English language and slang are involved; and published information on ethnicity, BYOD policies, and routes of redress for errors and their consequences. All this needs to be made clear.

What happens after these many different systems trigger keywords is often entirely opaque to families, as our poll of parents in February 2018 showed, and as we highlighted in this week’s blog. The poll, commissioned by defenddigitalme through Survation, showed that 86% of parents with children in England’s state education system think that both children and parents should be informed of what the consequences are if these keywords are searched for, and 69% believe children should be told what these thousands of keywords are.

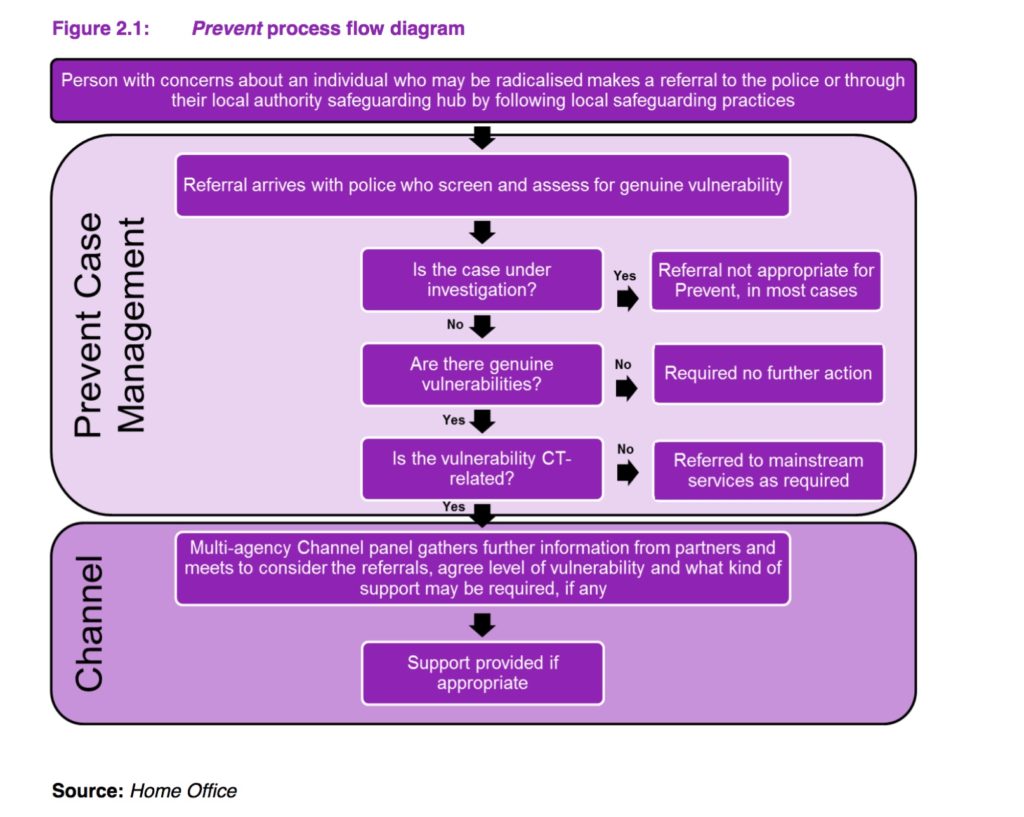

Information generated by safeguarding-in-schools software may be the trigger for staff to begin a Channel referral for children into the Prevent programme. 1 in 3 of all referrals come from the education sector today. The Home Office diagram of process flows below sets out the process, which starts with a Channel police practitioner screening the children referred.

70% of referrals into the Channel programme in 2017-18 resulted in no action. Only 394 individuals received Channel support after the initial Channel panel discussion.

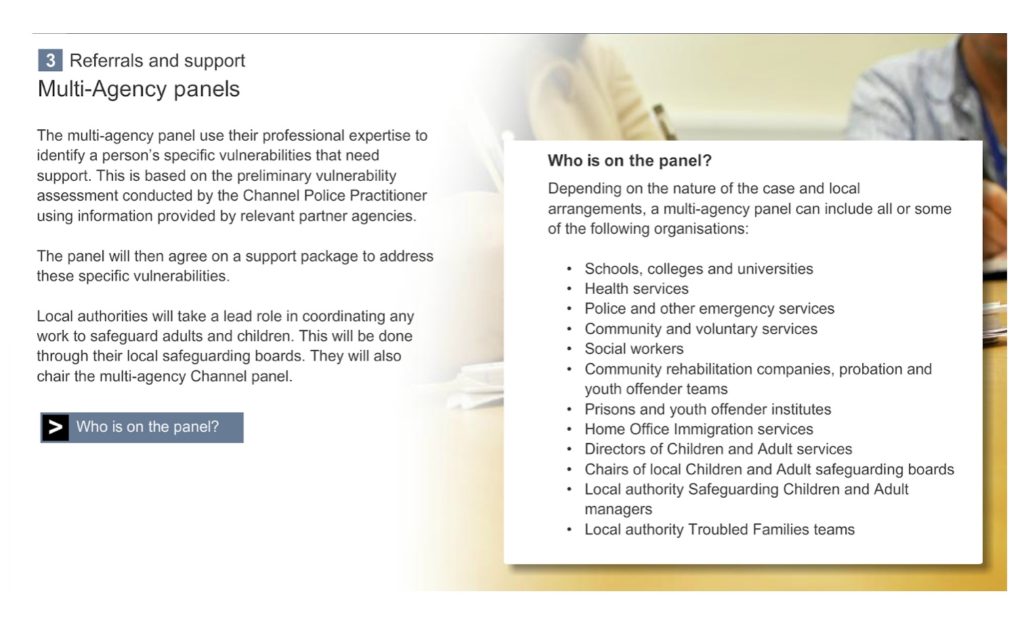

MASH (Multi-Agency-Safeguarding-Hubs) can be secretive, and some have refused to disclose for example, even the privacy policy given to families, or total numbers of children involved, although Channel participation is described as consensual and voluntary.

MASH policies can mean that data about a child, once it leaves school, may be shared across a wide variety of other agencies and held in their systems. Errors can therefore have a multiplying effect, and seeking fixes or redress is hard. Policies have been imposed without public consultation in schools. We are keen to bring clarity and consistency in related data handling for staff, children and families who raise concerns with us, given the very real consequences. Changes need to be underpinned by human rights-centric data principles of safe, fair and transparent processes. For example, we want to see families given fair explanations where screen content from every child is always recorded, even without triggering incidents, and consistent explanations of where and how that screen content is stored, or turned from “non-viewable” into ‘viewable’ upon an incident, and what that really means.

Numbers gathered by CRIN (Child Rights International Network) via Freedom of Information requests show that between March 2014 and April 2016, (with and without intervention after the Channel panel) 18.6% of the total referrals were Muslim children, and of all the children referred, Muslim children were disproportionately represented at 38%. Over-referral of children needing no action is a clear issue.

This is why we are calling for action to review school safeguarding software and Prevent policy, and for much more transparency and oversight, especially as the creeping capability of commercial tools includes surveillance of children at home, by intended design or not. It is a call we set out on our blog on December 16, and one which we’ll not be taking down.